MVAPICH2 2.3.7 User Guide

MVAPICH Team

Network-Based Computing Laboratory

Department of Computer Science and Engineering

The Ohio State University

http://mvapich.cse.ohio-state.edu

Copyright (c) 2001-2022

Network-Based Computing Laboratory,

headed by Dr. D. K. Panda.

All rights reserved.

Last revised: March 2, 2022

InfiniBand, Omni-Path, Ethernet/iWARP, RDMA over Converged Ethernet (RoCE), Slingshot 10, and Rockport Networks are high-performance networking technologies that deliver low latency and high bandwidth. They are also achieving widespread acceptance due to their open standards.

MVAPICH (pronounced as “em-vah-pich”) is open-source MPI software that exploits the novel features and mechanisms of these networking technologies to deliver the best performance and scalability to MPI applications. This software is developed in the Network-Based Computing Laboratory (NBCL), headed by Prof. Dhabaleswar K. (DK) Panda.

The MVAPICH2 MPI library supports MPI-3 semantics. This open-source MPI software project started in 2001, and a first high-performance implementation was demonstrated at the SuperComputing ’02 conference. Since then, this software has been steadily gaining acceptance in the HPC, InfiniBand, Omni-Path, Ethernet/iWARP and RoCE communities. As of March 2022, more than 3,200 organizations (National Labs, Universities and Industry) world-wide (in 89 countries) have registered as MVAPICH users at the MVAPICH project web site. There have also been more than 1.56 million downloads of this software directly from the MVAPICH project site. In addition, many InfiniBand, Omni-Path, Ethernet/iWARP and RoCE vendors, server vendors, systems integrators and Linux distributors have been incorporating MVAPICH2 into their software stacks and distributing it. The MVAPICH2 distribution is available under BSD licensing.

Several InfiniBand systems using MVAPICH2 have obtained positions in the TOP 500 ranking. The Nov ’21 list includes the following systems: 4th, 10,649,600 cores (Sunway TaihuLight) at National Supercomputing Center in Wuxi, China; 13th, 448,448 cores (Frontera) at TACC; 26th, 391,680 cores (ABCI) in Japan; 38th, 570,020 cores (Nurion) in South Korea; 39th, 556,104 cores (Oakforest-PACS) in Japan; 44th, 367,024 cores (Stampede2) at TACC.

More details on MVAPICH software, users list, mailing lists, sample performance numbers on a wide range of platforms and interconnects, a set of OSU benchmarks, related publications, and other InfiniBand-, RoCE-, Omni-Path-, and iWARP-related projects (High-Performance Big Data and High-Performance Deep Learning) can be obtained from our website: http://mvapich.cse.ohio-state.edu.

This document contains the information MVAPICH2 users need to download, install, test, use, tune and troubleshoot MVAPICH2 2.3.7. We continuously fix bugs and update this document based on user feedback. Therefore, we strongly encourage you to refer to our web page for updates.

This guide is designed to take the user through all the steps involved in configuring, installing, running and tuning MPI applications over InfiniBand using MVAPICH2 2.3.7.

In Section 3 we describe all the features in MVAPICH2 2.3.7. As you read through this section, please note our new features (highlighted as (NEW)) compared to version 2.3.6. Some of these features are designed to optimize specific types of MPI applications and achieve greater scalability. Section 4 describes in detail the configuration and installation steps. This section enables the user to identify specific compilation flags which can be used to turn some of the features on or off. Basic usage of MVAPICH2 is explained in Section 5. Section 6 provides instructions for running MVAPICH2 with some of the advanced features. Section 7 describes the usage of the OSU Benchmarks. In Section 8 we suggest some tuning techniques for multi-thousand node clusters using some of our new features. If you have any problems using MVAPICH2, please check Section 9 where we list some of the common problems people face. Finally, in Sections 11 and 12, we list all important run time parameters, their default values and a short description.

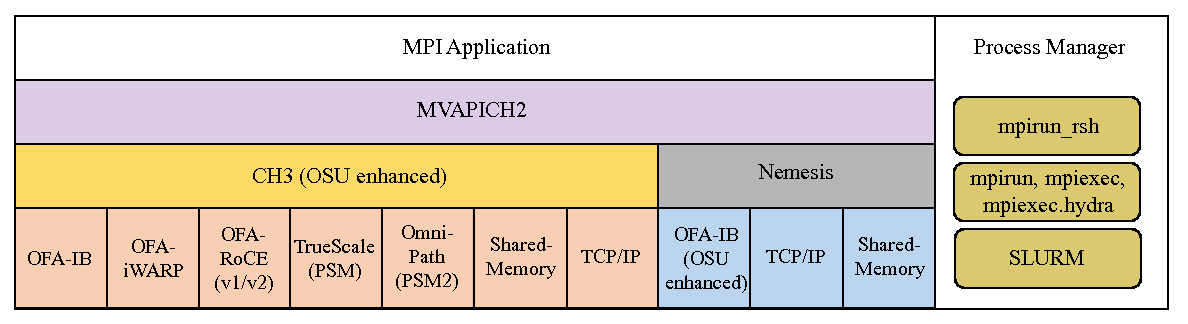

MVAPICH2 (MPI-3 over InfiniBand) is an MPI-3 implementation based on MPICH ADI3 layer. MVAPICH2 2.3.7 is available as a single integrated package (with MPICH 3.2.1). The current release supports ten different underlying transport interfaces, as shown in Figure 1.

MVAPICH2 2.3.7 is compliant with the MPI-3 standard. In addition, MVAPICH2 2.3.7 provides support and optimizations for NVIDIA GPU, multi-threading and fault-tolerance (Checkpoint-restart, Job-pause-migration-resume). The complete set of features of MVAPICH2 2.3.7 is listed below. New features compared to v2.2 are indicated as (NEW).

The MVAPICH2 2.3.7 package and the project also include the following provisions:

The MVAPICH2 installation process is designed to enable the most widely utilized features on the target build OS by default. The other interfaces, as indicated in Figure 1, can also be selected on Linux. This installation section provides generic instructions for building from a tarball or our latest sources.

In order to obtain the best performance and scalability while retaining the flexibility to use a large number of features, the MVAPICH team strongly recommends the following interfaces for different adapters: 1) OFA-IB-CH3 interface for all Mellanox InfiniBand adapters, Cray Slingshot 10 or lower, and Rockport Networks hardware, 2) TrueScale (PSM-CH3) interface for all Intel InfiniBand adapters, 3) OFA-RoCE-CH3 interface for all RoCE adapters, 4) OFA-iWARP-CH3 for all iWARP adapters and 5) Shared-Memory-CH3 for single-node SMP systems and laptops.

Please see the appropriate subsection for specific configuration instructions for the interface-adapter you are targeting.

The MVAPICH2 2.3.7 source code package includes MPICH 3.2.1. All the required files are present as a single tarball. Download the most recent distribution tarball from:

http://mvapich.cse.ohio-state.edu/downloads

Unpack the tarball and use the standard GNU procedure to compile:

$ tar -xzf mvapich2-2.3.7.tgz

$ cd mvapich2-2.3.7

$ ./configure

$ make

$ make install

Parallel make is supported: use the -j<num threads> option to speed up the build process. For example, to build with 4 threads, replace the preceding make step with:

$ make -j 4

In order to install a debug build, please use the following configuration option. Please note that using debug builds may impact performance.

$ ./configure --enable-g=all --enable-error-messages=all

$ make

$ make install

These instructions assume you have already installed subversion.

The MVAPICH2 SVN repository is available at:

https://scm.nowlab.cse.ohio-state.edu/svn/mpi/mvapich2/

Please keep in mind the following guidelines before deciding which version to check out:

$ svn co https://scm.nowlab.cse.ohio-state.edu/svn/mpi/mvapich2/tags/2.3.7 mvapich2

$ svn co https://scm.nowlab.cse.ohio-state.edu/svn/mpi/mvapich2/trunk mvapich2

The mvapich2 directory under your present working directory contains a working copy of the MVAPICH2 source code. Now that you have obtained a copy of the source code, you need to update the files in the source tree:

$ cd mvapich2

$ ./autogen.sh

This script will generate all of the source and configuration files you need to build MVAPICH2. You will need autoconf version >= 2.67, automake version >= 1.12.3, and libtool version >= 2.4.
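Before running autogen.sh it can be useful to confirm that the installed autotools meet these minimum versions. The following is a minimal sketch, not part of MVAPICH2: the helper names version_ge and check_tool are hypothetical, and it assumes a `sort` that supports the `-V` (version sort) option, as GNU coreutils does, and a `grep` that supports `-o`.

```shell
# Sketch: compare installed autotools against the minimum versions above.
# Assumption: `sort -V` and `grep -oE` are available (GNU tools).

# version_ge HAVE WANT: succeed if version HAVE is at least WANT.
version_ge() {
    # The smaller version sorts first, so HAVE >= WANT exactly when
    # WANT is the first line of the sorted pair.
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# check_tool NAME WANT: report whether the installed NAME is new enough.
check_tool() {
    if ! command -v "$1" >/dev/null 2>&1; then
        echo "$1: not found"
        return 1
    fi
    # Take the first dotted number on the first line of `NAME --version`.
    have=$("$1" --version 2>/dev/null | head -n 1 |
           grep -oE '[0-9]+(\.[0-9]+)+' | head -n 1)
    if version_ge "$have" "$2"; then
        echo "$1 $have: ok (need >= $2)"
    else
        echo "$1 ${have:-unknown}: too old (need >= $2)"
        return 1
    fi
}

status=0
check_tool autoconf 2.67   || status=1
check_tool automake 1.12.3 || status=1
check_tool libtool 2.4     || status=1
```

After the script runs, `status` is 0 only if all three tools were found and are recent enough. On systems where GNU libtool is installed under a different name, adjust the last call accordingly.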

$ ./configure

$ make

$ make install

MVAPICH2 provides the mpirun_rsh/mpispawn framework from MVAPICH distribution. Using mpirun_rsh should provide the fastest startup of your MPI jobs. More details can be found in Section 5.2.1. In addition, MVAPICH2 also includes the Hydra process manager from MPICH-3.2.1. For more details on using Hydra, please refer to Section 5.2.2.

By default, mpiexec uses the Hydra process launcher. Please note that neither mpirun_rsh nor Hydra requires you to start daemons in advance on the nodes used for an MPI job. Both mpirun_rsh and Hydra can be used with any of the eight interfaces of this MVAPICH2 release, as indicated in Figure 1.

Usage: ./configure [OPTION]... [VAR=VALUE]...

To assign environment variables (e.g., CC, CFLAGS...), specify them as VAR=VALUE. See below for descriptions of some of the useful variables.

| Variable | Description |
|---|---|
| RSH_CMD | path to rsh command |
| SSH_CMD | path to ssh command |
| ENV_CMD | path to env command |
| DBG_CMD | path to debugger command |
| XTERM_CMD | path to xterm command |
| SHELL_CMD | path to shell command |
| TOTALVIEW_CMD | path to totalview command |

If you’d like to use SLURM to launch your MPI programs, please use the following configure options.

To configure MVAPICH2 to use PMI-1 support in SLURM:

$ ./configure --with-pmi=pmi1 --with-pm=slurm

To configure MVAPICH2 to use PMI-2 support in SLURM:

$ ./configure --with-pmi=pmi2 --with-pm=slurm

To configure MVAPICH2 to use PMIx support in SLURM:

$ ./configure --with-pmi=pmix --with-pm=slurm

MVAPICH2 automatically detects and uses PMI extensions if available from the process manager. To build and install SLURM with PMI support, please follow these steps:

Download the SLURM source tarball for SLURM-15.08.8 from

http://slurm.schedmd.com/download.html.

Download the patch to add PMI Extensions in SLURM from

http://mvapich.cse.ohio-state.edu/download/mvapich/osu-shmempmi-slurm-15.08.8.patch.

$ tar -xzf slurm-15.08.8.tar.gz

$ cd slurm-15.08.8

$ patch -p1 < osu-shmempmi-slurm-15.08.8.patch

$ ./configure --prefix=/path/to/slurm/install --disable-pam

$ make -j4 && make install && make install-contrib

To configure MVAPICH2 with the modified SLURM, please use:

$ ./configure --with-pm=slurm --with-pmi=pmi2 --with-slurm=/path/to/slurm/install

MVAPICH2 can also be configured with PMIx plugin of SLURM:

$ ./configure --with-pm=slurm --with-pmi=pmix --with-pmix=/path/to/pmix/install

Note that --with-pmix should refer to the pmix/install directory that is used to build SLURM.

Please refer to Section 5.2.3 for information on how to run MVAPICH2 using SLURM.

MVAPICH2 supports PMIx extensions for JSM. To configure MVAPICH2 with PMIx plugin of JSM, please use:

$ ./configure --with-pm=jsm --with-pmi=pmix --with-pmix=/path/to/pmix/install

Note that --with-pmix should refer to the pmix/install directory that is used to build JSM.

MVAPICH2 can also use pmi4pmix library to support JSM. It can be configured as follows:

$ ./configure --with-pm=jsm --with-pmi=pmi4pmix --with-pmi4pmix=/path/to/pmi4pmix/install

Please refer to Section 5.2.5 for information on how to run MVAPICH2 using the Jsrun launcher.

To configure MVAPICH2 with Flux support, please use:

$ ./configure --with-pm=flux --with-flux=/path/to/flux/install

Please refer to Section 5.2.6 for information on how to run MVAPICH2 using Flux.

OpenFabrics (OFA) IB/iWARP/RoCE/Slingshot/Rockport with the CH3 channel is the default interface on Linux. It can be explicitly selected by configuring with:

$ ./configure --with-device=ch3:mrail --with-rdma=gen2

Both static and shared libraries are built by default. In order to build with static libraries only, configure as follows:

$ ./configure --with-device=ch3:mrail --with-rdma=gen2 --disable-shared

To enable use of the TotalView debugger, the library needs to be configured in the following manner:

$ ./configure --with-device=ch3:mrail --with-rdma=gen2 --enable-g=dbg --enable-debuginfo

Configuration Options for OpenFabrics IB/iWARP/RoCE/Slingshot/Rockport

The Berkeley Lab Checkpoint/Restart (BLCR) installation is automatically detected if installed in the standard location. To specify an alternative path to the BLCR installation, you can either use:

--with-blcr=<path/to/blcr/installation>

or

--with-blcr-include=<path/to/blcr/headers>

--with-blcr-libpath=<path/to/blcr/library>

The Filesystem in Userspace (FUSE) installation is automatically detected if installed in the standard location. To specify an alternative path to the FUSE installation, you can either use:

--with-fuse=<path/to/fuse/installation>

or

--with-fuse-include=<path/to/fuse/headers>

--with-fuse-libpath=<path/to/fuse/library>

SCR caches checkpoint data in storage on the compute nodes of a Linux cluster to provide a fast, scalable checkpoint / restart capability for MPI codes.

The Fault Tolerance Backplane (FTB) installation is automatically detected if installed in the standard location. To specify an alternative path to the FTB installation, you can either use:

--with-ftb=<path/to/ftb/installation>

or

--with-ftb-include=<path/to/ftb/headers>

--with-ftb-libpath=<path/to/ftb/library>

This section details the configuration option to enable GPU-GPU communication with the OFA-IB-CH3 interface of the MVAPICH2 MPI library. For more options on configuring the OFA-IB-CH3 interface, please refer to Section 4.4.

The CUDA installation is automatically detected if installed in the standard location. To specify an alternative path to the CUDA installation, you can either use:

--with-cuda=<path/to/cuda/installation>

or

--with-cuda-include=<path/to/cuda/include>

--with-cuda-libpath=<path/to/cuda/libraries>

In addition to these, we have added the following variables to help account for libraries being installed in different locations:

--with-libcuda=<path/to/directory/containing/libcuda>

--with-libcudart=<path/to/directory/containing/libcudart>

Note: If using the PGI compiler, you will need to add the following to your CPPFLAGS and CFLAGS. You’ll also need to use the --enable-cuda=basic configure option to build properly. See the example below.

The support for running jobs across multiple subnets in MVAPICH2 can be enabled at configure time as follows:

$ ./configure --enable-multi-subnet

MVAPICH2 relies on the RDMA_CM module to establish connections with peer processes. The RDMA_CM modules shipped with some older versions of OFED (such as OFED-1.5.4.1) do not have the necessary support to enable communication across multiple subnets. MVAPICH2 is capable of automatically detecting such OFED installations at configure time. If the OFED installation present on the system does not support running across multiple subnets, the configure step will detect this and exit with an error message.

The default CH3 channel provides native support for shared memory communication on stand-alone multi-core nodes that are not equipped with InfiniBand adapters. The steps to configure the CH3 channel explicitly can be found in Section 4.4. Dynamic Process Management (5.2.7) is currently not supported on stand-alone nodes without InfiniBand adapters.

The Nemesis sub-channel for OFA-IB is now deprecated. It can be built with:

$ ./configure --with-device=ch3:nemesis:ib

Both static and shared libraries are built by default. In order to build with static libraries only, configure as follows:

$ ./configure --with-device=ch3:nemesis:ib --disable-shared

To enable use of the TotalView debugger, the library needs to be configured in the following manner:

$ ./configure --with-device=ch3:nemesis:ib --enable-g=dbg --enable-debuginfo

Configuration options for OFA-IB-Nemesis:

The Berkeley Lab Checkpoint/Restart (BLCR) installation is automatically detected if installed in the standard location. To specify an alternative path to the BLCR installation, you can either use:

--with-blcr=<path/to/blcr/installation>

or

--with-blcr-include=<path/to/blcr/headers>

--with-blcr-libpath=<path/to/blcr/library>

The TrueScale (PSM-CH3) interface needs to be built to use MVAPICH2 on Intel TrueScale adapters. It can be built with:

$ ./configure --with-device=ch3:psm

Both static and shared libraries are built by default. In order to build with static libraries only, configure as follows:

$ ./configure --with-device=ch3:psm --disable-shared

To enable use of the TotalView debugger, the library needs to be configured in the following manner:

$ ./configure --with-device=ch3:psm --enable-g=dbg --enable-debuginfo

Configuration options for Intel TrueScale PSM channel:

The Omni-Path (PSM2-CH3) interface needs to be built to use MVAPICH2 on Intel Omni-Path adapters. It can be built with:

$ ./configure --with-device=ch3:psm

Both static and shared libraries are built by default. In order to build with static libraries only, configure as follows:

$ ./configure --with-device=ch3:psm --disable-shared

To enable use of the TotalView debugger, the library needs to be configured in the following manner:

$ ./configure --with-device=ch3:psm --enable-g=dbg --enable-debuginfo

Configuration options for Intel Omni-Path PSM2 channel:

The use of TCP/IP with Nemesis channel requires the following configuration:

$ ./configure --with-device=ch3:nemesis

Both static and shared libraries are built by default. In order to build with static libraries only, configure as follows:

$ ./configure --with-device=ch3:nemesis --disable-shared

To enable use of the TotalView debugger, the library needs to be configured in the following manner:

$ ./configure --with-device=ch3:nemesis --enable-g=dbg --enable-debuginfo

Additional instructions for configuring with TCP/IP-Nemesis can be found in the MPICH documentation available at: http://www.mcs.anl.gov/research/projects/mpich2/documentation/index.php?s=docs

The use of TCP/IP requires the explicit selection of a TCP/IP enabled channel. The recommended channel is TCP/IP Nemesis (described in Section 4.13). The alternative ch3:sock channel can be selected by configuring with:

$ ./configure --with-device=ch3:sock

Both static and shared libraries are built by default. In order to build with static libraries only, configure as follows:

$ ./configure --with-device=ch3:sock --disable-shared

To enable use of the TotalView debugger, the library needs to be configured in the following manner:

$ ./configure --with-device=ch3:sock --enable-g=dbg --enable-debuginfo

Additional instructions for configuring with TCP/IP can be found in the MPICH documentation available at:

http://www.mpich.org/documentation/guides/

MVAPICH2 supports a unified binary for both OFA and TCP/IP communication through the Nemesis interface.

In order to configure MVAPICH2 for unified binary support, perform the following steps:

$ ./configure --with-device=ch3:nemesis:ib,tcp

You can use mpicc as usual to compile MPI applications. In order to run your application on OFA:

$ mpiexec -f hosts -n 2 ./a.out

To run your application on TCP/IP:

$ MPICH_NEMESIS_NETMOD=tcp mpiexec -f hosts -n 2 ./osu_latency

The use of Nemesis shared memory channel requires the following configuration.

$ ./configure --with-device=ch3:nemesis

Both static and shared libraries are built by default. In order to build with static libraries only, configure as follows:

$ ./configure --with-device=ch3:nemesis --disable-shared

To enable use of the TotalView debugger, the library needs to be configured in the following manner:

$ ./configure --with-device=ch3:nemesis --enable-g=dbg --enable-debuginfo

Additional instructions for configuring with Shared-Memory-Nemesis can be found in the MPICH documentation available at:

http://www.mcs.anl.gov/research/projects/mpich2/documentation/index.php?s=docs

MVAPICH2 can be configured and installed with Singularity in the following manner. Note that the following prerequisites must be fulfilled before this step.

Sample Configuration and Installation with Singularity

MVAPICH2 can be configured and installed with Spack. For detailed instructions on installing MVAPICH2 with Spack, please refer to the Spack user guide at:

http://mvapich.cse.ohio-state.edu/userguide/userguide_spack/

MVAPICH2 provides a variety of MPI compilers to support applications written in different programming languages. Please use mpicc, mpif77, mpiCC, or mpif90 to compile applications. The correct compiler should be selected depending upon the programming language of your MPI application.

These compilers are available in the MVAPICH2_HOME/bin directory. The MVAPICH2 installation directory can also be specified by modifying $PREFIX, in which case all the above compilers will be present in the $PREFIX/bin directory.

This section provides instructions on how to run applications with MVAPICH2. Please note that on new multi-core architectures, process-to-core placement has an impact on performance. Please refer to Section 6.5 to learn about running MVAPICH2 library on multi-core nodes.

The MVAPICH team recommends this mode of job start-up for all interfaces (including OFA-IB-CH3, OFA-IB-Nemesis, OFA-iWARP-CH3, OFA-RoCE-CH3, TrueScale (PSM-CH3), Omni-Path (PSM2-CH3), Shared memory-CH3, TCP/IP-CH3 and TCP/IP-Nemesis). The mpirun_rsh scheme provides fast and scalable job start-up and scales to multi-thousand node clusters.

Prerequisites:

Examples of running programs using mpirun_rsh:

$ mpirun_rsh -np 4 n0 n0 n1 n1 ./cpi

This command launches cpi on nodes n0 and n1, two processes per node. By default ssh is used.

$ mpirun_rsh -launcher rsh -np 4 n0 n0 n1 n1 ./cpi

This command launches cpi on nodes n0 and n1, two processes per node, using rsh instead of ssh.

$ mpirun_rsh -launcher srun -np 4 n0 n0 n1 n1 ./cpi

This command launches cpi on nodes n0 and n1, two processes per node, using srun instead of ssh. This is useful on clusters that do not support direct ssh access to compute nodes.

MPIRUN_RSH Hostfile:

$ mpirun_rsh -np 4 -hostfile hosts ./cpi

A list of target nodes may be provided in the file hosts, one per line. MPI ranks are assigned in the order of the hosts listed in the hosts file or in the order they are passed to mpirun_rsh. For example, if the nodes are listed as n0 n1 n0 n1, then n0 will have two processes, rank 0 and rank 2, whereas n1 will have ranks 1 and 3. This rank distribution is known as “cyclic”. If the nodes are listed as n0 n0 n1 n1, then n0 will have ranks 0 and 1, whereas n1 will have ranks 2 and 3. This rank distribution is known as “block”. A cyclic (one entry per host) hostfile can be modified with the ppn launch option to use a block distribution.
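The ordering rule can be illustrated with a small shell sketch. It does not launch anything; the function rank_layout is a hypothetical helper that only prints which rank each host entry would receive when ranks are handed out in list order.

```shell
# Sketch: model of "ranks are assigned in the order the hosts are listed".
# rank_layout is a hypothetical helper, not an MVAPICH2 command.

# rank_layout HOST...: print "rank N -> HOST" for each entry, in order.
rank_layout() {
    rank=0
    for host in "$@"; do
        echo "rank $rank -> $host"
        rank=$((rank + 1))
    done
}

echo "cyclic (n0 n1 n0 n1):"
rank_layout n0 n1 n0 n1

echo "block (n0 n0 n1 n1):"
rank_layout n0 n0 n1 n1
```

With the cyclic list, n0 receives ranks 0 and 2; with the block list, n0 receives ranks 0 and 1.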

Hostfile Format

The mpirun_rsh hostfile format allows users to specify hostnames, one per line, optionally with a multiplier and an HCA specification.

The multiplier saves typing by letting you specify a blocked distribution of MPI ranks using one line per hostname. The HCA specification allows you to force an MPI rank to use a particular HCA.

The optional components are delimited by a ‘:’. Comments and empty lines are also allowed. Comments start with ‘#’ and continue to the next newline.
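To make the format concrete, the sketch below expands a hostfile into one line per MPI rank. It is an illustration only: expand_hostfile is a hypothetical helper, the host and HCA names are made up, and it assumes that a numeric field after the hostname is the multiplier and a non-numeric field is the HCA name.

```shell
# Sketch: expand hostfile entries into one "host [hca]" line per rank.
# Assumption: entries look like host, host:N, host:HCA, or host:N:HCA.
# expand_hostfile is a hypothetical helper, not an MVAPICH2 command.

# expand_hostfile [FILE]: read FILE (or stdin) and print the expansion.
expand_hostfile() {
    awk -F: '
        { sub(/#.*/, "") }          # drop comments up to end of line
        { gsub(/[ \t]+/, "") }      # drop whitespace
        $0 == "" { next }           # skip empty lines
        {
            host = $1; count = 1; hca = ""
            if ($2 ~ /^[0-9]+$/) { count = $2; hca = $3 }
            else                 { hca = $2 }
            line = host
            if (hca != "") line = host " " hca
            for (i = 0; i < count; i++) print line
        }
    ' "$@"
}

# Sample hostfile with hypothetical host and HCA names.
expand_hostfile <<'EOF'
# sample hostfile
n0
n1:2
n2:mlx5_0
n3:2:mlx5_1   # two ranks, both using mlx5_1

EOF
```

For the sample input, the sketch prints one line for n0, two for n1, one for n2 with mlx5_0, and two for n3 with mlx5_1, which corresponds to ranks 0 through 5.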

When launching in a SLURM or PBS environment, the hostfile can be read directly from the resource manager. In this case, a combination of the np and ppn launch options is sufficient. A ppn value of 1 results in a cyclic distribution, while higher ppn values provide a block distribution. Note that the number of processes specified by np will always be met; the ppn value only specifies the number of processes to assign to each host consecutively.

$ mpirun_rsh -np 8 -ppn 2 ./cpi

This command launches cpi on all nodes allocated to the SLURM/PBS job, assigning rank 0 and rank 1 to n1, rank 2 and rank 3 to n2, and so on. If those are the only nodes in the job, n1 will then receive ranks 4 and 5, and n2 will receive ranks 6 and 7.
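The interaction between np and ppn can be modeled with a few lines of shell. This is a sketch of the placement rule as described here, not the launcher's actual code: place_ranks is a hypothetical helper that gives each host ppn consecutive ranks and wraps around the host list until np ranks have been placed.

```shell
# Sketch: model of rank placement for -np NP -ppn PPN over a host list.
# place_ranks is a hypothetical helper, not an MVAPICH2 command.

# place_ranks NP PPN HOST...: print "rank N -> HOST" for every rank.
place_ranks() {
    np=$1; ppn=$2; shift 2
    nhosts=$#
    rank=0
    while [ "$rank" -lt "$np" ]; do
        # 1-based index of the host that owns this rank: hosts take turns
        # receiving PPN consecutive ranks, wrapping around the list.
        idx=$(( (rank / ppn) % nhosts + 1 ))
        eval "host=\${$idx}"
        echo "rank $rank -> $host"
        rank=$((rank + 1))
    done
}

# Two-node job, matching the command above.
place_ranks 8 2 n1 n2
```

For the two-node case, the sketch places ranks 0, 1, 4 and 5 on n1 and ranks 2, 3, 6 and 7 on n2. Calling it with a ppn of 1 reproduces the cyclic distribution.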

Specifying Environmental Variables

Many parameters of the MPI library can be configured at run-time using environmental variables. In order to pass any environment variable to the application, simply put the variable names and values just before the executable name, as in the following example:

$ mpirun_rsh -np 4 -hostfile hosts ENV1=value ENV2=value ./cpi

Note that the environmental variables should be put immediately before the executable.

Alternatively, you may also place environmental variables in your shell environment (e.g. .bashrc). These will be automatically picked up when the application starts executing.

Note that mpirun_rsh is sensitive to the ordering of the command-line arguments.
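Because the argument order matters, a job script may find it convenient to assemble the command line in one place. The sketch below only prints the command rather than running it; mv2_cmdline is a hypothetical helper, and the variable names ENV1 and ENV2 are the placeholders used in the example above.

```shell
# Sketch: build an mpirun_rsh command line with the VAR=VALUE pairs placed
# immediately before the executable. mv2_cmdline is a hypothetical helper.

# mv2_cmdline NP HOSTFILE EXE [VAR=VALUE...]: print the command line.
mv2_cmdline() {
    np=$1; hostfile=$2; exe=$3; shift 3
    line="mpirun_rsh -np $np -hostfile $hostfile"
    for kv in "$@"; do
        case $kv in
            *=*) line="$line $kv" ;;
            *)   echo "not VAR=VALUE: $kv" >&2; return 1 ;;
        esac
    done
    printf '%s %s\n' "$line" "$exe"
}

mv2_cmdline 4 hosts ./cpi ENV1=value ENV2=value
```

The printed line has the launcher options first, then the variables, then the executable, which is the order shown in the example above.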

There are many different parameters which could be used to improve the performance of applications depending upon their requirements from the MPI library. For a discussion on how to identify such parameters, see Section 8.

Job Launch using MPMD

The mpirun_rsh framework also supports job launching using MPMD mode. It permits the use of heterogeneous jobs using multiple executables and command line arguments. The following format needs to be used:

$ mpirun_rsh -config configfile -hostfile hosts

A list of the different groups of executables must be provided to the job launcher in the file configfile, one per line. The configfile can contain comments. Lines beginning with “#” are considered comments.

For example:

#Config file example

#Launch 4 copies of exe1 with arguments arg1 and arg2

-n 4 : exe1 arg1 arg2

#Launch 2 copies of exe2

-n 2 : exe2

A list of target nodes must be provided in the file hosts, one per line, and the allocation policy previously described is used.
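When preparing an MPMD launch, it can help to know the total number of processes a config file requests, for example to compare it against the number of entries in the hosts file. The following sketch sums the -n counts; config_total is a hypothetical helper, and it assumes each non-comment line starts with "-n N" as in the example above.

```shell
# Sketch: total the process counts requested by an MPMD config file.
# Assumption: every non-comment line begins with "-n N", as in the example.
# config_total is a hypothetical helper, not an MVAPICH2 command.

# config_total [FILE]: read FILE (or stdin) and print the sum of the counts.
config_total() {
    awk '
        /^[ \t]*#/ || /^[ \t]*$/ { next }   # skip comments and blank lines
        $1 == "-n" { total += $2 }
        END { print total + 0 }
    ' "$@"
}

config_total <<'EOF'
#Config file example
#Launch 4 copies of exe1 with arguments arg1 and arg2
-n 4 : exe1 arg1 arg2
#Launch 2 copies of exe2
-n 2 : exe2
EOF
```

For the example config file, the four copies of exe1 and two copies of exe2 give a total of six processes.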

Please note that this section only gives general information on how to run applications using mpirun_rsh. Please refer to the following sections for more information on how to run the application over various interfaces such as iWARP and RoCE.

Other Options

Other options of mpirun_rsh can be obtained using

$ mpirun_rsh --help

Hydra is the default process manager for MPICH. MVAPICH2 also distributes Hydra along with mpirun_rsh. Hydra can be used either by using mpiexec or mpiexec.hydra. All interfaces of MVAPICH2 will work using Hydra. The following is an example of running a program using it:

$ mpiexec -f hosts -n 2 ./cpi

The Hydra process manager can be used to launch MPMD jobs. For example the following command:

$ mpiexec -genv FOO=1 -env BAR=1 -n 2 ./exec1 : -env BAR=2 -n 2 ./exec2

The environment variable FOO=1 passed to “-genv” is applied to the environment of all executables (i.e. exec1 and exec2). The value BAR=1 applies only to exec1 and BAR=2 applies only to exec2.

This process manager has many features. Please refer to the following web page for more details.

http://wiki.mcs.anl.gov/mpich2/index.php/Using_the_Hydra_Process_Manager

SLURM is an open-source resource manager designed by Lawrence Livermore National Laboratory and maintained by SchedMD. SLURM software package and its related documents can be downloaded from: http://www.schedmd.com/

Once SLURM is installed and the daemons are started, applications compiled with MVAPICH2 can be launched by SLURM, e.g.

$ srun -n 2 ./a.out

The use of SLURM enables many good features such as explicit CPU and memory binding. For example, if you have two processes and want to bind the first process to CPU 0 and Memory 0, and the second process to CPU 4 and Memory 1, then it can be achieved by:

$ srun --cpu_bind=v,map_cpu:0,4 --mem_bind=v,map_mem:0,1 -n2 --mpi=none ./a.out

To use PMI-2 with SLURM, please use:

$ srun --mpi=pmi2 -n 2 ./a.out

To use PMIx with SLURM, please use:

$ srun --mpi=pmix -n 2 ./a.out

If PMI-2/PMIx is selected and the installed version of SLURM supports PMI/PMIx, MVAPICH2 will automatically use the extensions.

For more information about SLURM and its features please visit http://www.schedmd.com/

Both mpirun_rsh and mpiexec can take information from the PBS/Torque environment to launch jobs (i.e. launch on nodes found in PBS_NODEFILE).

You can also use MVAPICH2 in a tightly integrated manner with PBS. To do this, configure MVAPICH2 with the --with-pbs option. Below is a snippet from the ./configure --help output of the Hydra process manager (mpiexec) that you will use with PBS/Torque.

--with-pbs=PATH specify path where pbs include directory and lib directory can be found

--with-pbs-include=PATH specify path where pbs include directory can be found

--with-pbs-lib=PATH specify path where pbs lib directory can be found

For more information on using hydra, please visit the following URL: http://wiki.mpich.org/mpich/index.php/Using_the_Hydra_Process_Manager

Job Step Manager (JSM) is a job scheduler developed by IBM. To launch MPI applications with jsrun, use the following commands:

$ bsub -nnodes 2 -G guests -W 240 -Is /usr/bin/tcsh

$ jsrun -a 1 -c ALL_CPUS -g ALL_GPUS --bind=none -n 2 ./mpiHello

More information about how to use JSM can be found at the following URL: https://www.ibm.com/support/knowledgecenter/en/SSWRJV_10.1.0/jsm/10.2/base/jsm_kickoff.html

Flux is an open-source resource manager developed at Lawrence Livermore National Laboratory. The Flux software package and its related documents can be downloaded from: https://github.com/flux-framework. To allocate a node and set up Flux, the following commands are used:

$ salloc -N1 -p pdebug

$ srun --pty -N 1 -n 1 --mpi=none flux keygen

$ srun --pty -N 1 -n 1 --mpi=none flux start

Once Flux is set up, applications compiled with MVAPICH2 can be launched by Flux as follows:

$ flux wreckrun -N 1 -n 2 -c 1 ./a.out

MVAPICH2 (OFA-IB-CH3 interface) provides MPI-2 dynamic process management. This feature allows MPI applications to spawn new MPI processes according to MPI-2 semantics. The following commands provide an example of how to run your application.

Please refer to Section 11.71 for information about the MV2_SUPPORT_DPM environment variable.

The MVAPICH2 library can automatically detect iWARP cards and use them with the appropriate settings at run time. This feature deprecates the use of the environment variable MV2_USE_IWARP_MODE which was being used earlier to enable the use of iWARP devices at run time.

All the systems to be used need the following one time setup for enabling RDMA CM usage.

Programs can be executed as follows:

$ mpirun_rsh -np 2 n0 n1 prog

The iWARP interface also provides TotalView debugging and shared library support. Please refer to Section 4.4.

RDMA over Converged Ethernet (RoCE) is supported with the use of the run time environment variable MV2_USE_RoCE.

Programs can be executed as follows:

$ mpirun_rsh -np 2 MV2_USE_RoCE=1 prog

RoCE requires a loss-less Ethernet fabric. This requires configuring the Ethernet switch to treat RoCE traffic as loss-less. A separate VLAN interface needs to be created on the RoCE NIC on all compute nodes and assigned a private IP address.

In a loss-less fabric setup, MVAPICH2 can be run in RoCE mode in the following two ways:

You would like to run an MPI job using IPoIB but your IB card is not the default interface for IP traffic. Assume that you have a cluster setup as the following:

| Hostname | Eth Addr | IPoIB Addr |
|---|---|---|
| compute1 | 192.168.0.1 | 192.168.1.1 |
| compute2 | 192.168.0.2 | 192.168.1.2 |
| compute3 | 192.168.0.3 | 192.168.1.3 |
| compute4 | 192.168.0.4 | 192.168.1.4 |

The Ethernet addresses are assigned to eth0 and the IPoIB addresses are assigned to the ib0 interface. The host names resolve to the 192.168.0.* addresses.

The most direct way to use the IPoIB network is to populate your hosts file with the IP addresses of the ib0 interfaces.

Example:

$ cat - > hosts

192.168.1.1

192.168.1.2

192.168.1.3

192.168.1.4

$ mpirun_rsh -hostfile hosts -n 4 ./app1

or

$ mpiexec -f hosts -n 4 ./app1

Another way to achieve this is to use the -iface option of Hydra. This allows your hosts file to use the host names even though they resolve to the eth0 interface.

Example:

$ cat - > hosts

compute1

compute2

compute3

compute4

$ mpiexec -f hosts -iface ib0 -n 4 ./app1

More information can be found at the following link.

MVAPICH2 contains optimized Lustre ADIO support for the OFA-IB-CH3 interface. The Lustre directory should be mounted on all nodes on which MVAPICH2 processes will be running. Compile MVAPICH2 with ADIO support for Lustre as described in Section 4. If your Lustre mount is /mnt/datafs on nodes n0 and n1, on node n0, you can compile and run your program as follows:

$ mpicc -o perf romio/test/perf.c

$ mpirun_rsh -np 2 n0 n1 <path to perf>/perf -fname /mnt/datafs/testfile

If you have enabled support for multiple file systems, append the prefix “lustre:” to the name of the file. For example:

$ mpicc -o perf romio/test/perf.c

$ mpirun_rsh -np 2 n0 n1 ./perf -fname lustre:/mnt/datafs/testfile

MVAPICH2 provides TotalView support. The following commands provide an example of how to build and run your application with TotalView support. Note: running TotalView requires a correct setup in your environment. If you encounter any problem with your setup, please check with your system administrator for help.

All MPI-2 functions of MVAPICH2 support the MPI profiling interface. This allows MVAPICH2 to be used by a variety of profiling libraries for MPI applications.

The use of two profiling libraries, Scalasca and mpiP, is described below.

Once the installation is done, you will be able to use Scalasca with MVAPICH2.

For more information about Scalasca and its features, please visit the Scalasca website.

Simply run your MPI application as usual. When an MPI application runs, a file with the mpiP extension is created, which contains the following information:

Prerequisites to install the mpiP library: the mpiP library has a build dependency on the following libraries. Usually, these libraries are installed along with the Linux installation. You may also download these libraries from the specified URLs and install them according to their README files.

As an alternative to GNU binutils, libelf and libdwarf can be used for source lookup.

The sample configure command to build mpiP library is given below. Please refer to the mpiP web page for commands specific to your system environment.

./configure LDFLAGS=-L/usr/lib64 LIBS="-lbfd -liberty" --enable-collective-report-default --enable-demangling=GNU --with-cc=mpicc --with-cxx=mpiCC --with-f77=mpif77

To compile the file example.c, use the mpicc built in the Singularity environment as described in Section 4.15.

$ singularity exec Singularity-Centos-7.img mpicc /path/to/example.c -o example

The MPI binary (example) can be installed into the container at /usr/bin as follows.

$ sudo singularity copy Singularity-Centos-7.img ./example /usr/bin/

Run the MPI program within the container by using the mpirun_rsh job launcher on the host. As with the native MVAPICH2 version, different features can be enabled through the use of runtime variables.

$ mpirun_rsh -np <nprocs> -hostfile hostfile MV2_IBA_HCA=mlx4_0 singularity exec Singularity-Centos-7.img /usr/bin/example

In this section, we present the usage instructions for advanced features provided by MVAPICH2.

In MVAPICH2 2.3.7, run-time variables are used to switch various optimization schemes on and off. The following is a list of optimization schemes and the environment variables that control them. For a full list, please refer to Section 11:

Traditionally, with mpirun_rsh you have to specify on the command line all environment variables that you want visible to the remote MPI processes. With the -export option of mpirun_rsh this is no longer necessary.

Please note that the -export option does not overwrite variables that are normally already set when you first ssh into the remote node. If you want to export all variables including the ones that are already set you can use the -export-all option.

MVAPICH2 supports the use of configuration values to ease the burden of users when they would like to set and repeatedly use many different environment variables. These can be stored in a configuration file with statements of the form “VARIABLE = VALUE”. Full line comments are supported and begin with the “#” character.

The system configuration file can be placed at /etc/mvapich2.conf while user configuration files are located at “~/.mvapich2.conf” by default. The user configuration file can be specified at runtime by MV2_USER_CONFIG if the user would like to have MVAPICH2 read from a different location.

The processing of these files can be disabled by the use of the MV2_IGNORE_SYSTEM_CONFIG and MV2_IGNORE_USER_CONFIG variables.

Run with blocking mode enabled

Do not use user configuration file
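The configuration file format can be illustrated with a short sketch. It writes a file in the “VARIABLE = VALUE” form and lists the settings it contains. The scratch file path and the helper list_settings are illustrative, and MV2_USE_BLOCKING is assumed here to be the variable that enables blocking mode. Setting MV2_IGNORE_USER_CONFIG to 1 in the commented command is likewise an assumption about the expected value.

```shell
#!/bin/sh
# Sketch: write a configuration file in the "VARIABLE = VALUE" form and
# list the settings it contains. The path is a scratch location used for
# illustration; a real user file would live at ~/.mvapich2.conf.
conf="${TMPDIR:-/tmp}/mvapich2.conf.example.$$"

cat > "$conf" <<'EOF'
# Full-line comments start with '#'
# MV2_USE_BLOCKING is assumed here to be the blocking-mode switch
MV2_USE_BLOCKING = 1
MV2_CPU_BINDING_POLICY = scatter
EOF

# list_settings is a hypothetical helper: it drops comments and blank
# lines and prints each remaining entry as VARIABLE=VALUE.
list_settings() {
    sed -e '/^[[:space:]]*#/d' -e '/^[[:space:]]*$/d' \
        -e 's/[[:space:]]*=[[:space:]]*/=/' "$1"
}

list_settings "$conf"
# To read this file instead of the default, or to skip user configuration:
#   mpirun_rsh -np 2 n0 n1 MV2_USER_CONFIG="$conf" ./a.out
#   mpirun_rsh -np 2 n0 n1 MV2_IGNORE_USER_CONFIG=1 ./a.out
```

Listing the effective entries before a run is a quick way to check which settings a job will pick up from the file.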

MVAPICH2 can be suspended and resumed when using a process launcher that catches and forwards the appropriate signals.

For example, when using mpirun_rsh you can type Ctrl-Z (or send the SIGTSTP signal) at the terminal and the job will suspend. You can then later send the SIGCONT signal to the job and it will continue.

MVAPICH2-CH3 interfaces support architecture-specific CPU mapping through the Portable Hardware Locality (hwloc) software package. By default, the HWLOC sources are compiled and built while the MVAPICH2 library is being installed. Users can pass the “--disable-hwloc” parameter while configuring the library if they do not wish to have the HWLOC library installed. However, in such cases, the MVAPICH2 library will not be able to perform any affinity-related operations.

There are two major schemes as indicated below. To take advantage of either of these schemes, the jobs need to run with CPU affinity (MV2_ENABLE_AFFINITY) and shared memory (MV2_USE_SHARED_MEM) turned on (default). If users choose to set these run-time parameters to 0, then the kernel takes care of mapping processes to cores and none of these schemes will be enabled.

To report the process mapping, users can set the environment variable MV2_SHOW_CPU_BINDING to 1 (Section 10.7).

Under this scheme, the HWLOC tool is used at job-launch time to detect the processor’s micro-architecture and then generate a suitable CPU mapping string based on it. Three policies are currently implemented: “bunch”, “scatter”, and “hybrid”. By default, we choose to use the “hybrid” mapping. However, we also allow users to choose a binding policy through the run-time variable MV2_CPU_BINDING_POLICY (Section 11.14).

For example, if you want to run 4 processes per node and utilize “bunch” policy on each node, you can specify:

$ mpirun_rsh -np 4 -hostfile hosts MV2_CPU_BINDING_POLICY=bunch ./a.out

The CPU binding will be set as shown in Figure 2.

If you want to run 4 processes per node and utilize “scatter” policy on each node, you can specify:

$ mpirun_rsh -np 4 -hostfile hosts MV2_CPU_BINDING_POLICY=scatter ./a.out

The CPU binding will be set as shown in Figure 3.

If two applications with four processes each need to share a given node (with eight cores) at the same time with “bunch” policy, you can specify:

$ mpirun_rsh -np 4 -hostfile hosts MV2_CPU_BINDING_POLICY=bunch ./a.out

$ mpirun_rsh -np 4 -hostfile hosts MV2_CPU_BINDING_POLICY=bunch ./b.out

The CPU binding will be set as shown in Figure 4.

If two applications with four processes each need to share a given node (with eight cores) at the same time with “scatter” policy, you can specify:

$ mpirun_rsh -np 4 -hostfile hosts MV2_CPU_BINDING_POLICY=scatter ./a.out

$ mpirun_rsh -np 4 -hostfile hosts MV2_CPU_BINDING_POLICY=scatter ./b.out

The CPU binding will be set as shown in Figure 5.

The aforementioned binding is based on the core level, meaning each MPI process is bound to a specific core. MVAPICH2 also provides other process binding levels. There are three binding levels: “core”, “socket”, and “numanode” (which is designed for multicore processors with NUMA node units). We use “core” as the default binding level, and we also allow users to choose a binding level through the run-time variable MV2_CPU_BINDING_LEVEL (Section 11.16). For example, if you want to run 4 processes per node and utilize “socket” as the binding level on each node, you can specify:

$ mpirun_rsh -np 4 -hostfile hosts MV2_CPU_BINDING_LEVEL=socket ./a.out

The CPU binding will be set as shown in Figure 6. Note: because we use “bunch” as the default binding policy, all four processes will be bound to the first socket and each of them can use all four cores in this socket. When the binding policy is “bunch” and the binding level is “socket”, processes will be bound to the same socket until the number of processes exceeds the number of cores in the socket.

If you want to run 4 processes per node, utilize “socket” as the binding level and “scatter” as the binding policy, you can specify:

$ mpirun_rsh -np 4 -hostfile hosts MV2_CPU_BINDING_LEVEL=socket MV2_CPU_BINDING_POLICY=scatter ./a.out

The CPU binding will be set as shown in Figure 7.

We have introduced one additional policy called “hybrid” for the MV2_CPU_BINDING_POLICY variable. This policy can also be used for hybrid MPI+Threads (OpenMP, pthreads, etc.) applications where each MPI rank additionally spawns multiple threads. The detailed usage of this variable and any additional variables you might need is discussed in Section 6.20.

In addition to MV2_CPU_BINDING_POLICY, we have also provided a new environment variable called MV2_HYBRID_BINDING_POLICY to specify thread specific binding policies. The detailed description and usage of this variable is explained in Section 6.21.

Under the second scheme, users can also use their own mapping to bind processes to CPUs on modern multi-core systems. This feature is especially useful on multi-core systems, where performance may differ depending on which cores the processes are mapped to. The mapping can be specified by setting the environment variable MV2_CPU_MAPPING (Section 11.13).

For example, if you want to run 4 processes per node and utilize cores 0, 1, 4, 5 on each node, you can specify:

$ mpirun_rsh -np 64 -hostfile hosts MV2_CPU_MAPPING=0:1:4:5 ./a.out

or

$ mpiexec -n 64 -f hosts -env MV2_CPU_MAPPING 0:1:4:5 ./a.out

In this way, process 0 on each node will be mapped to core 0, process 1 will be mapped to core 1, process 2 will be mapped to core 4, and process 3 will be mapped to core 5. For each process, the mapping is separated by a single “:”.

MVAPICH2 supports binding one process to multiple cores in the same node with “,” or “-”. For example:

$ mpirun_rsh -np 64 -hostfile hosts MV2_CPU_MAPPING=0,2,3,4:1:5:6 ./a.out

or

$ mpirun_rsh -np 64 -hostfile hosts MV2_CPU_MAPPING=0,2-4:1:5:6 ./a.out

In this way, process 0 on each node will be mapped to core 0, core 2, core 3, and core 4; process 1 will be mapped to core 1, process 2 will be mapped to core 5, and process 3 will be mapped to core 6. This feature is designed to support the case where one rank spawns multiple threads and sets thread binding in the program.
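To check how a mapping string will be read, it can help to split it by hand before launching a job. The following is a minimal sketch that only parses the string in the shell; it does not query MVAPICH2. The helper show_cpu_mapping is a hypothetical name.

```shell
#!/bin/sh
# Sketch: print which cores each local rank receives from a mapping string.
# show_cpu_mapping is a hypothetical helper, not part of MVAPICH2.
show_cpu_mapping() {
    mapping=$1
    rank=0
    old_ifs=$IFS
    IFS=:
    # Unquoted on purpose: the string is split at every ':'
    for cores in $mapping; do
        printf 'local rank %d -> cores %s\n' "$rank" "$cores"
        rank=$((rank + 1))
    done
    IFS=$old_ifs
}

show_cpu_mapping "0,2-4:1:5:6"
```

Each “:”-separated field belongs to one local rank, and a field may itself be a list or range of cores written with “,” or “-”.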

Here we provide a table with latency performance of 0-byte and 8 KB messages using different CPU mapping schemes. The results show how process binding can affect benchmark performance. We strongly suggest considering the best CPU mapping on multi-core platforms when carrying out benchmarking and performance evaluation with MVAPICH2.

The following measurements were taken on a machine with dual quad-core 2.53 GHz Intel Xeon processors with a 12 MB L3 cache shared among the cores in one socket. MVAPICH2-2.3.7 was built with gcc-4.4.6 and default configure arguments:

Message Latency

| Core Pair | 0-byte | 8k-byte | Notes |
|---|---|---|---|
| 1,2 | 0.17 us | 1.83 us | same socket, shared L3 cache, best performance |
| 0,1 | 0.17 us | 1.87 us | same socket, shared L3 cache, but core 0 handles interrupts |
| 1,5 | 0.41 us | 3.16 us | different sockets |
| 0,4 | 0.42 us | 3.17 us | different sockets, but core 0 handles interrupts |

MVAPICH2 CH3-based interfaces support LiMIC2 for intra-node communication for medium and large messages to get higher performance. LiMIC2 is also used to optimize intra-node one-sided communication in OFA-IB-CH3 and OFA-iWARP-CH3 interfaces. It is disabled by default because it requires the LiMIC2 package to be installed beforehand. As a convenience, we distribute the latest LiMIC2 package (as of this release) with our sources.

To install this package, please take the following steps.

Before using LiMIC2 you’ll need to load the kernel module. If you followed the instructions above you can do this using the following command (LSB init script).

Please note that supplying ‘--sysconfdir=/etc’ in the configure line above told the package to install the init script and a udev rule in the standard location for system packages. Supplying ‘--prefix=/usr’ will also install the headers and libraries in the system path. These are optional but recommended.

Now you can use LiMIC2 with MVAPICH2 by simply supplying the ‘--with-limic2’ option when configuring MVAPICH2. You can run your applications as normal and LiMIC2 will be used by default. To disable it at run time, use the environment variable:

$ mpirun_rsh -np 64 -hostfile hosts MV2_SMP_USE_LIMIC2=0 ./a.out

In MVAPICH2, support for shared memory based collectives has been enabled for MPI applications running over OFA-IB-CH3, OFA-iWARP-CH3, TrueScale (PSM-CH3) and Omni-Path (PSM2-CH3) interfaces. Currently, this support is available for the following collective operations:

Optionally, this feature can be turned off at run time by using the following parameters:

Please refer to Section 11 for further details.

In MVAPICH2, support for intra-node topology aware collectives is enabled by default. This feature can be toggled using MV2_ENABLE_TOPO_AWARE_COLLECTIVES (section 11.131). It requires shared memory collectives to be enabled (section 11.93). Supported interfaces include OFA-IB-CH3, OFA-iWARP-CH3, TrueScale (PSM-CH3) and Omni-Path (PSM2-CH3). Currently, this support is available for the following collective operations:

Run-time parameters exist to toggle each of the supported collective operations. The parameters are:

MVAPICH2 also provides support for topology-aware allreduce with SHArP support for inter-node operations by default. Please refer to Section 6.27 for more details on how to enable this feature.

Please refer to Section 11 for further details.

In MVAPICH2, support for multicast based collectives has been enabled for MPI applications running over OFA-IB-CH3 interface. Currently, this support is available for the following collective operations:

This feature is enabled by default and can be toggled at run time by using the parameter MV2_USE_MCAST (section 11.119). This feature is effective when the MPI job is running on more nodes than the threshold MV2_MCAST_NUM_NODES_THRESHOLD (section 11.120).

Both RDMA_CM and libibumad based multicast group setup schemes are supported. RDMA_CM based multicast group setup (enabled by default) can be toggled using the MV2_USE_RDMA_CM_MCAST (section 11.134) run-time parameter.

If RDMA_CM based multicast group setup is disabled or cannot be used, multicast requires the libibumad and libibmad libraries to be installed on the cluster, and users must have read/write permission on /dev/infiniband/umad0.

If neither RDMA_CM nor libibumad based group setup for multicast is a viable option, the feature can be disabled using the --disable-mcast configure flag.

In MVAPICH2, we offer new intra-node Zero-Copy designs (using LiMIC2) for the MPI_Gather collective operation. This feature can be used when the library has been configured to use LiMIC2 (Section 6.6). This feature is disabled by default and can be turned on at run time by using the parameter MV2_USE_LIMIC_GATHER (Section 11.118).

MVAPICH2 has a scalable design based on the InfiniBand connectionless transport, Unreliable Datagram (UD). Applications can use UD-only transport by simply configuring MVAPICH2 with the --enable-hybrid option (which is enabled by default) and setting the environment variable MV2_USE_ONLY_UD (Section 11.116). In this mode, the library does not use any reliable RC connections. This eliminates all the overheads associated with RC connections and reduces the memory footprint at large scale.

MVAPICH2 has integrated hybrid transport support for OFA-IB-CH3. This provides the capability to use Unreliable Datagram (UD), Reliable Connection (RC) and eXtended Reliable Connection (XRC) transports of InfiniBand. This hybrid transport design is targeted at emerging multi-thousand-core clusters to deliver the best possible performance and scalability with a constant memory footprint.

Applications can use hybrid transport by simply configuring MVAPICH2 with the --enable-hybrid option. In this configuration, MVAPICH2 seamlessly uses UD and RC/XRC connections by default. The use of UD transport can be disabled at run time by setting the environment variable MV2_USE_UD_HYBRID (Section 11.115) to 0.

MV2_HYBRID_ENABLE_THRESHOLD (Section 11.110) defines the threshold for enabling the hybrid transport. Hybrid mode will be used when the size of the job is greater than or equal to the threshold. Otherwise, it uses default RC/XRC connections.
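The threshold rule can be written out as a small sketch. It only restates the comparison described above and does not inspect a running job. The helper transport_for_job and the threshold value of 1024 are illustrative assumptions.

```shell
#!/bin/sh
# Sketch: restate the hybrid threshold rule as a comparison.
# transport_for_job is a hypothetical helper; 1024 is an example threshold.
transport_for_job() {
    job_size=$1     # number of processes in the job
    threshold=$2    # value of MV2_HYBRID_ENABLE_THRESHOLD
    if [ "$job_size" -ge "$threshold" ]; then
        echo "hybrid (UD and RC/XRC)"
    else
        echo "RC/XRC"
    fi
}

transport_for_job 512 1024
transport_for_job 1024 1024
```

A job whose size equals the threshold already runs in hybrid mode, since the comparison is greater than or equal.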

For a full list of Hybrid environment variables, please refer to Section 11.

MVAPICH2 has integrated multi-rail support for OFA-IB-CH3 and OFA-iWARP-CH3 interfaces. Run-time variables are used to specify the control parameters of the multi-rail support: the number of adapters with MV2_NUM_HCAS (section 11.33), the number of ports per adapter with MV2_NUM_PORTS (section 11.35), and the number of queue pairs per port with MV2_NUM_QP_PER_PORT (section 11.37). These variables default to 1 if you do not specify them.

Large messages are striped across all HCAs. The threshold for striping is set according to the following formula:

(MV2_VBUF_TOTAL_SIZE × MV2_NUM_PORTS × MV2_NUM_QP_PER_PORT × MV2_NUM_HCAS).

In addition, there is another parameter, MV2_STRIPING_THRESHOLD (section 11.70), which users can utilize to set the striping threshold directly.
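As a worked instance of the striping formula, the following sketch multiplies the four parameters in the shell. The 16384-byte value used for MV2_VBUF_TOTAL_SIZE is an illustrative assumption, since the actual default depends on the platform, and striping_threshold is a hypothetical helper name.

```shell
#!/bin/sh
# Sketch: evaluate the default striping threshold formula.
# The 16384-byte MV2_VBUF_TOTAL_SIZE is an illustrative value only.
striping_threshold() {
    vbuf_total_size=$1
    num_ports=$2
    num_qp_per_port=$3
    num_hcas=$4
    echo $((vbuf_total_size * num_ports * num_qp_per_port * num_hcas))
}

# Two adapters, one port per adapter, one queue pair per port
striping_threshold 16384 1 1 2
```

With these example values the threshold is 32768 bytes, so doubling the number of HCAs doubles the message size at which striping begins.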

MVAPICH2 also gives the flexibility to balance short message traffic over multiple HCAs in a multi-rail configuration. The run-time variable MV2_SM_SCHEDULING can be used to choose between the various load balancing options available. It can be set to USE_FIRST (Default) or ROUND_ROBIN. In the USE_FIRST scheme, the HCA in slot 0 is always used to transmit the short messages. If ROUND_ROBIN is chosen, messages are sent across all HCAs alternately.

In the following example, we use multi-rail support with two adapters, using one port per adapter and one queue pair per port:

$ mpirun_rsh -np 2 n0 n1 MV2_NUM_HCAS=2 MV2_NUM_PORTS=1 MV2_NUM_QP_PER_PORT=1 prog

Using the Hydra process manager, the same can be accomplished by:

$ mpiexec -n 2 -hosts n0,n1 -env MV2_NUM_HCAS 2 -env MV2_NUM_PORTS 1 -env MV2_NUM_QP_PER_PORT 1 prog

Note that the default values of MV2_NUM_PORTS and MV2_NUM_QP_PER_PORT are 1, so they can be omitted.

$ mpirun_rsh -np 2 n0 n1 MV2_NUM_HCAS=2 prog

Using the Hydra process launcher, the following command can be used:

$ mpiexec -n 2 -hosts n0,n1 -env MV2_NUM_HCAS 2 prog
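The two launchers take the same settings in different forms: mpirun_rsh accepts VARIABLE=VALUE pairs, while Hydra's mpiexec accepts -env VARIABLE VALUE. The following is a minimal sketch of a converter between the two forms, assuming simple values without spaces. The helper to_hydra_env is a hypothetical name and is not shipped with MVAPICH2.

```shell
#!/bin/sh
# Sketch: turn mpirun_rsh-style VARIABLE=VALUE pairs into Hydra -env options.
# to_hydra_env is a hypothetical helper; values are assumed to have no spaces.
to_hydra_env() {
    out=""
    for pair in "$@"; do
        name=${pair%%=*}    # text before the first '='
        value=${pair#*=}    # text after the first '='
        out="$out -env $name $value"
    done
    # Strip the single leading space before printing
    printf '%s\n' "${out# }"
}

to_hydra_env MV2_NUM_HCAS=2 MV2_NUM_PORTS=1 MV2_NUM_QP_PER_PORT=1
```

The printed options can be pasted between the host list and the program name of an mpiexec command line.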

The user can also select the particular network card(s) that should be used by using the MV2_IBA_HCA environment variable specified in section 11.25. The following is an example of how to run MVAPICH2 in this mode. (In the example “mlx4_0” is the name of the InfiniBand card as displayed by the output of the “ibstat” command).

$ mpirun_rsh -np 2 n0 n1 MV2_IBA_HCA=mlx4_0 prog

If there are multiple HCAs in the system, the user can also selectively use some or all of these HCAs for network operations by using the MV2_IBA_HCA environment variable. Different HCAs are delimited by colons “:”. An example is shown below. In the example, “mlx4_0” and “mlx4_1” are the names of the InfiniBand cards as displayed by the output of the “ibstat” command. There can be other HCAs in the system as well.

$ mpirun_rsh -np 2 n0 n1 MV2_IBA_HCA=mlx4_0:mlx4_1 prog
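When the list of adapters is kept in a script, the colon-delimited value can be assembled rather than typed. The following is a minimal sketch; join_hcas is a hypothetical helper, and the adapter names are the ones used in the example above.

```shell
#!/bin/sh
# Sketch: join HCA names with ':' to form a value for MV2_IBA_HCA.
# join_hcas is a hypothetical helper, not part of MVAPICH2.
join_hcas() {
    old_ifs=$IFS
    IFS=:
    joined="$*"    # "$*" joins arguments with the first character of IFS
    IFS=$old_ifs
    printf '%s\n' "$joined"
}

MV2_IBA_HCA=$(join_hcas mlx4_0 mlx4_1)
echo "MV2_IBA_HCA=$MV2_IBA_HCA"
```

The resulting string can be placed on the mpirun_rsh command line in the same position as in the example above.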

MVAPICH2 now features an enhanced design for multiple rail configurations for OFA-IB-CH3 and OFA-iWARP-CH3 interfaces. It can broadly be explained by the figure given below. In addition to the earlier design where the rails were shared among processes at run time (as depicted under the Rail Sharing banner in the figure below), MVAPICH2 now features a new RAIL BINDING policy which will dedicate a particular rail to a particular process.

The scheduling policies are broadly classified into two basic types: Rail Binding and Rail Sharing.

The user defined policies can be set in the following manner by giving appropriate values to the parameter MV2_PROCESS_TO_RAIL_MAPPING (section 11.40)

MVAPICH2 provides system-level rollback-recovery capability based on a coordinated Checkpoint-Restart protocol involving all the application processes.

The following section (6.15.1) provides instructions for basic checkpoint/restart operations with MVAPICH2. These require the Berkeley Lab Checkpoint/Restart (BLCR) library in order to perform checkpoints of local processes. Then, advanced features are presented. Section 6.15.1 details the usage of the fast-checkpointing scheme based on aggregation. Section 6.15.1 presents the support of the new standardized Fault Tolerance Backplane (FTB).

Basic Checkpoint/Restart Scheme: BLCR is a library that takes checkpoints of individual processes. Its usage is mandatory to take advantage of the checkpoint/restart functionality in MVAPICH2. Here are the steps that allow the usage of BLCR with MVAPICH2.

Users are strongly encouraged to read the Administrators guide of BLCR, and test the BLCR on the target platform, before using the checkpointing feature of MVAPICH2.

Checkpointing operation

Now, your system is set up to use the Checkpoint/Restart features of MVAPICH2. Several parameters are provided by MVAPICH2 for flexibility in configuring and using the Checkpoint/Restart features. If mpiexec is used as the job start-up mechanism, these parameters need to be set in the user’s environment through the BASH shell’s export command, or the equivalent command for other shells. If mpirun_rsh is used as the job start-up mechanism, these parameters need to be passed to mpirun_rsh through the command line.

In order to provide maximum flexibility to end users who wish to use the checkpoint/restart features of MVAPICH2, we have provided three different methods that can be used to take checkpoints during the execution of the MPI application. These methods are described as follows:

where PID is the process id of the mpiexec or mpirun_rsh process. In order to simplify the process, the script mv2_checkpoint can be used. This script is available in the same directory as mpiexec and mpirun_rsh.

Restart operation

To restart a job from a manual checkpoint, users need to issue another BLCR command, “cr_restart”, with the checkpoint file name of the MPI job console as the parameter. Usually, this file is named context.<pid>. The checkpoint file name of the MPI job console can be specified when issuing the checkpoint (see “cr_checkpoint --help” for more information). Please note that the names of the checkpoint files of the MPI processes will be assigned according to the environment variable MV2_CKPT_FILE ($MV2_CKPT_FILE.<number of checkpoint>.<process rank>).

To restart a job from an automatic checkpoint, use cr_restart $MV2_CKPT_FILE.<number of checkpoint>.auto.
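The naming pattern for per-process checkpoint files can be sketched as follows. The prefix /tmp/ckpt and the checkpoint number 1 are illustrative values, and ckpt_files is a hypothetical helper that only prints names; it does not create or inspect checkpoint files.

```shell
#!/bin/sh
# Sketch: list the per-process checkpoint file names for one checkpoint,
# following the pattern $MV2_CKPT_FILE.<number of checkpoint>.<process rank>.
# ckpt_files is a hypothetical helper; /tmp/ckpt is an example prefix.
ckpt_files() {
    prefix=$1      # value of MV2_CKPT_FILE
    ckpt_num=$2    # checkpoint number (example value)
    nprocs=$3      # number of MPI processes
    rank=0
    while [ "$rank" -lt "$nprocs" ]; do
        printf '%s.%d.%d\n' "$prefix" "$ckpt_num" "$rank"
        rank=$((rank + 1))
    done
}

MV2_CKPT_FILE=/tmp/ckpt
ckpt_files "$MV2_CKPT_FILE" 1 4
```

A list like this can be compared against the files actually present to confirm that every rank wrote its checkpoint before a restart is attempted.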

If the user wishes to restart the MPI job on a different set of nodes, the host file that was specified along with the “-hostfile” option during the job launch phase should be modified accordingly before trying to restart a job with “cr_restart”. This modified “hostfile” must be at the same location and have the same file name as the original hostfile. The mpirun_rsh framework parses the host file again when trying to restart from a checkpoint, and launches the job on the corresponding nodes. This is possible as long as the nodes on which the user is trying to restart have exactly the same environment as the one in which the checkpoint was taken (including shared NFS mounts, kernel versions, and user libraries).

For this to function correctly, the user should disable pre-linking on both the source and the destination node. See the FAQ Section of the BLCR userguide for more information.

Please refer to Section 9.6 for troubleshooting with Checkpoint/Restart.

Write-Aggregation based Fast Checkpointing Scheme: MVAPICH2 provides an enhanced technique that allows fast checkpoint and restart. This scheme, named Aggregation, relies on the Filesystem in Userspace (FUSE) library.

Although Aggregation is optional, its usage is recommended to achieve the best performance during checkpoint and restart operations. For this reason, if the FUSE library is detected during the configuration step, Aggregation is automatically enabled (see section 4.4). Once enabled at the configuration step, the aggregation scheme can be disabled at run time by setting the environment variable MV2_CKPT_USE_AGGREGATION=0 (see section 11.8 for details).

The following steps need to be done to use FUSE library for aggregation scheme.

If write aggregation has been enabled at configuration time, MVAPICH2 will check the FUSE configuration of each node during startup (FUSE module loaded and fusermount command in the PATH). If one node is not properly configured, then MVAPICH2 will abort. In this case, you need to fix the FUSE configuration of the nodes, or disable aggregation using MV2_CKPT_USE_AGGREGATION=0 to run MVAPICH2.

Fault Tolerance Backplane (FTB) support: MVAPICH2 supports the new standardized Fault Tolerance Backplane (FTB). FTB can be used for Checkpoint-Restart and Job Pause-Migration-Restart Frameworks. Activating FTB support is optional to perform checkpoint/restart operations, but it will allow MVAPICH2 to automatically publish and listen to standardized events through the Fault Tolerance Backplane.

FTB has been developed and standardized by the CIFTS project. It enables faults to be handled in a coordinated and holistic manner in the entire system, providing for an infrastructure which can be used by different software systems to exchange fault-related information.

If using the FTB framework for checkpoint/restart, the following steps need to be done in addition to the above steps.

Checkpoint/Restart support for the OFA-IB-Nemesis Interface: MVAPICH2 also provides Checkpoint-Restart capabilities for the OFA-IB-Nemesis interface. Currently, this feature can only be used in conjunction with the Hydra process manager. More details on using Hydra to checkpoint an MPI application can be found here.

The Scalable Checkpoint-Restart (SCR) library developed at the Lawrence Livermore National Laboratory (LLNL) enables MPI applications to utilize distributed storage on Linux clusters to attain high file I/O bandwidth for checkpointing and restarting large-scale jobs. With SCR, jobs run more efficiently, lose less work upon a failure, and reduce load on critical shared resources such as the parallel file system and the network infrastructure.

In the current SCR implementation, application checkpoint files are cached in storage local to the compute nodes, and a redundancy scheme is applied such that the cached files can be recovered even after a failure disables part of the system. SCR supports the use of spare nodes such that it is possible to restart a job directly from its cached checkpoint, provided the redundancy scheme holds and provided there are sufficient spares.

The SCR library implements three redundancy schemes which trade off performance, storage space, and reliability:

SCR is integrated into MVAPICH2 to provide Multi-Level checkpointing capabilities to MPI applications in two modes: application-aware mode and transparent mode. The following sub-sections illustrate the steps needed to checkpoint an application using these two schemes.

Application-Aware Multi-Level Checkpointing: In this case, it is assumed that the application knows what data to checkpoint and how to read data from a checkpoint during a restart. It is also assumed that each process writes its checkpoint data in a unique file. For an application to actually write a checkpoint using SCR, the following steps need to be followed.

The following code snippet describes the checkpointing function of a sample MPI application that writes checkpoints using SCR:

The following code snippet describes the SCR-assisted restart mechanism for a sample MPI application:

For more instructions on integrating the SCR APIs into an MPI application, and for information about the various runtime parameters that can be set by the user, please refer to the SCR Userguide.

Transparent Multi-Level Checkpointing:

The benefits of SCR can also be leveraged by applications that do not employ their own Checkpoint-Restart mechanism. The SCR-MVAPICH2 integration makes this possible by using SCR to manage the checkpoints generated by BLCR, the basic system-level checkpointing scheme described in Section 6.15.1. Once MVAPICH2 has been configured with SCR, the steps required to checkpoint and restart a job transparently using BLCR and SCR are the same as those described in Section 6.15.1. MVAPICH2 uses SCR’s APIs internally to manage checkpoint storage transparently and efficiently.

For information about the various SCR-specific runtime parameters that can be set by the user, and for detailed information about the redundancy schemes employed by SCR, please refer to the SCR Userguide.

MVAPICH2 provides a node-level Job Pause-Migration-Restart mechanism for the OFA-IB-CH3 interface, which can be used to migrate MPI processes on a given failing node to a healthy node, selected from a pool of spare hosts provided by the user during job-launch.

This Job Migration framework relies on BLCR and FTB libraries. See subsections in 6.15.1 to set up these libraries. The source and target nodes are required to have these libraries installed in the same path.

The Job Migration framework makes use of BLCR to take a checkpoint of all MPI processes running on the failing node in question. Users are strongly recommended to either disable the “prelinking” feature, or execute the following command:

$ prelink --undo --all

on all nodes before starting a job that will later be migrated. Please refer to this BLCR web page for complete information: BLCR FAQ.

During job-launch, the list of spare hosts can be provided as input to mpirun_rsh using the -sparehosts option which takes a hostfile as argument.

The following example illustrates a sample MPI job being launched to run with job migration support:

$ mpirun_rsh -np 4 -hostfile ./hosts -sparehosts ./spare_hosts ./prog

where spare_hosts is a file which contains a list of healthy spare hosts that is needed by the job migration framework.

An actual migration of MPI processes from a failing source node to a target spare node can be triggered using one of two methods - using signals or using the mv2_trigger utility.

For the signal-triggered method, users can manually initiate the migration protocol by issuing a SIGUSR2 signal to the mpispawn processes running on the source node. This can be done using the following command:

$ pkill -SIGUSR2 mpispawn

The migration protocol can also be triggered using the simple utility provided with MVAPICH2:

$ $PREFIX/bin/mv2_trigger src

where src is the hostname of the health-deteriorating node from which all MPI processes need to be migrated.

Please note that the ftb_agent daemon has to be launched on all the compute nodes and the spare nodes before launching the MPI application, in order to successfully migrate MPI processes. See section 6.15.1 for information about setting up the FTB infrastructure.

MVAPICH2 provides support for run-through stabilization wherein communication failures are not treated as fatal errors. On enabling this capability, MVAPICH2 returns the appropriate error code to a user-set error handler in the event of a communication failure, instead of terminating the entire job. When a process detects a failure when communicating with another process, it will consider the other process as having failed and will no longer attempt to communicate with that process. The user can, however, continue making communication calls to other processes. Any outstanding send or receive operations to a failed process, or wild-card receives (i.e., with MPI_ANY_SOURCE) posted to communicators with a failed process, will be immediately completed with an appropriate error code.

Currently, this support is available only for the OFA-IB-Nemesis and TCP/IP-Nemesis interfaces, when the Hydra process manager is used.

It can be enabled at run-time by:

MVAPICH2 supports network fault recovery by using the InfiniBand Automatic Path Migration (APM) mechanism for the OFA-IB-CH3 interface. This support is available for MPI applications using the OpenFabrics stack and InfiniBand adapters.

To enable this functionality, set the run-time variable MV2_USE_APM (Section 11.72), as shown in the following example:

$ mpirun_rsh -np 2 n0 n1 MV2_USE_APM=1 ./cpi

or

$ mpiexec -n 2 -hosts n0,n1 -env MV2_USE_APM 1 ./cpi

MVAPICH2 also supports testing Automatic Path Migration in the subnet in the absence of network faults. This can be controlled by using a run-time variable MV2_USE_APM_TEST (Section 11.73). This should be combined with MV2_USE_APM as follows:

$ mpirun_rsh -np 2 n0 n1 MV2_USE_APM=1 MV2_USE_APM_TEST=1 ./cpi

or

$ mpiexec -n 2 -hosts n0,n1 -env MV2_USE_APM 1 -env MV2_USE_APM_TEST 1 ./cpi

In MVAPICH2, RDMA CM is enabled with the run-time variable MV2_USE_RDMA_CM, as described in Section 11. This applies to the OFA-IB-CH3, OFA-iWARP-CH3 and OFA-RoCE-CH3 interfaces.

In addition to these flags, all the systems to be used need the following one time setup for enabling RDMA CM usage.

Programs can be executed as follows:

$ mpirun_rsh -np 2 n0 n1 MV2_USE_RDMA_CM=1 prog

or

$ mpiexec -n 2 -hosts n0,n1 -env MV2_USE_RDMA_CM 1 prog

MVAPICH2 relies on RDMA_CM to establish connections with peer processes.

The environment variables MV2_RDMA_CM_MULTI_SUBNET_SUPPORT (described in Section 11.84) and MV2_USE_RDMA_CM (described in Section 11.83) must be set to 1 (default value is 0) to enable the multi-subnet support in MVAPICH2.

The multi-subnet support in MVAPICH2 relies on the GID of the underlying InfiniBand HCA to establish communication with peer processes. In some scenarios, the GID may be populated in non-default indices in the GID table resulting in failure when trying to establish communication. In such scenarios, users can take advantage of the MV2_DEFAULT_GID_INDEX environment variable (described in Section 11.82) to point to the correct index in the GID table.

Programs can be executed as follows if GID is populated in the default location:

$ mpirun_rsh -np 2 n0 n1 MV2_USE_RDMA_CM=1 MV2_RDMA_CM_MULTI_SUBNET_SUPPORT=1 prog

If the GID is populated in a non-default location (e.g. 1), programs can be executed as follows:

$ mpirun_rsh -np 2 n0 n1 MV2_USE_RDMA_CM=1 MV2_RDMA_CM_MULTI_SUBNET_SUPPORT=1 MV2_DEFAULT_GID_INDEX=1 prog

MVAPICH2 binds processes to processor cores for optimal performance. Please refer to Section 6.5 for more details. However, in multi-threaded environments, it might be desirable to have each thread compute using a separate processor core. This is especially true for OpenMP+MPI programs.

In MVAPICH2, processor core mapping can be turned off as shown below so that the application runs at the MPI_THREAD_MULTIPLE threading level, provided the user requested it in MPI_Init_thread. Otherwise, applications will run at the MPI_THREAD_SINGLE threading level.

$ mpirun_rsh -np 2 n0 n1 MV2_ENABLE_AFFINITY=0 ./openmp+mpi_app

For the QLogic PSM interface:

$ mpirun_rsh -np 2 n0 n1 MV2_ENABLE_AFFINITY=0 IPATH_NO_CPUAFFINITY=1 ./openmp+mpi_app

Further, to get better performance for applications that use MPI + OpenMP, we recommend binding the OpenMP threads to the processor cores. This can potentially avoid cache effects due to unwanted thread migration. For example, if we consider a Quad-Core processor, with 1 MPI process and 4 OpenMP threads, we recommend binding OpenMP thread 0 to core 0, OpenMP thread 1 to core 1 and so on. This can be achieved by setting the kernel affinity via the following compiler-specific environment variables:

Intel Compilers: On Intel processors, we recommend using the KMP_AFFINITY run-time flag in the following manner:

$ mpirun_rsh -hostfile hostfile -np 1 MV2_ENABLE_AFFINITY=0 OMP_NUM_THREADS=4 KMP_AFFINITY=warnings,compact ./a.out

For more information, please refer to:

http://software.intel.com/sites/products/documentation/studio/composer/en-us/2011Update/compiler_c/optaps/common/optaps_openmp_thread_affinity.htm

GNU compilers: On both Intel and AMD processors, we recommend using the GOMP_CPU_AFFINITY run-time flag, in the following manner:

$ mpirun_rsh -hostfile hostfile -np 1 MV2_ENABLE_AFFINITY=0 OMP_NUM_THREADS=4 GOMP_CPU_AFFINITY="0,1,2,3" ./a.out

For more information, please refer to:

(http://gcc.gnu.org/onlinedocs/libgomp/GOMP_005fCPU_005fAFFINITY.html)

We recommend setting the following environment variables:

MV2_CPU_BINDING_POLICY=hybrid

MV2_THREADS_PER_PROCESS=<number of threads per process>

If MV2_THREADS_PER_PROCESS is not specified, OMP_NUM_THREADS is used to determine the CPU mapping. One core per thread will be allocated to the process. For good performance, we recommend setting MV2_THREADS_PER_PROCESS equal to or greater than OMP_NUM_THREADS.

Example with 8 MPI processes and 4 OpenMP threads per process:

$ mpirun_rsh -hostfile hostfile -np 8 OMP_NUM_THREADS=4 MV2_CPU_BINDING_POLICY=hybrid MV2_THREADS_PER_PROCESS=4 ./a.out

Knights Landing + Omni-Path Architecture: For systems with Knights Landing and the Omni-Path/PSM2 architecture, we recommend additionally setting the following environment variable: PSM2_KASSIST_MODE=none

Example with 8 MPI processes and 4 OpenMP threads per process:

$ mpirun_rsh -hostfile hostfile -np 8 OMP_NUM_THREADS=4 MV2_CPU_BINDING_POLICY=hybrid MV2_THREADS_PER_PROCESS=4 PSM2_KASSIST_MODE=none ./a.out

MV2_CPU_BINDING_POLICY is used to bind MPI ranks to processor cores. We have also introduced a new variable called MV2_HYBRID_BINDING_POLICY that offers several new policies for hybrid and thread specific binding in MPI and MPI+Threads execution environments. The possible bindings offered by this variable are “bunch”, “scatter”, “linear”, “compact”, “spread”, and “numa”.

linear — assigns cores to MPI ranks and their corresponding threads linearly. For example, the first rank is followed by its threads, then the second rank and its corresponding threads, and so on.

Example with 8 MPI processes and 4 OpenMP threads per process on a 68-core KNL processor with “linear” binding policy for threads is shown below:

$ mpirun_rsh -hostfile hostfile -np 8 OMP_NUM_THREADS=4 MV2_CPU_BINDING_POLICY=hybrid MV2_THREADS_PER_PROCESS=4 MV2_HYBRID_BINDING_POLICY=linear ./a.out

This will assign ranks and their corresponding threads linearly.
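The linear layout described above can be sketched as a small C helper. This is only an illustrative model, not MVAPICH2's implementation: it assumes cores are numbered consecutively from 0 and that each rank occupies a block of adjacent cores, one per thread. The function name linear_core is hypothetical.

```c
#include <assert.h>

/* Illustrative model only (not MVAPICH2 code): under a linear layout,
 * rank r with T threads per process occupies cores r*T .. r*T + T - 1.
 * ASSUMPTION: cores are numbered consecutively starting at 0. */
int linear_core(int rank, int thread, int threads_per_rank)
{
    assert(rank >= 0);
    assert(threads_per_rank > 0);
    assert(thread >= 0 && thread < threads_per_rank);
    return rank * threads_per_rank + thread;
}
```

With 8 ranks and 4 threads per rank, as in the example above, this model places rank 0 on cores 0 to 3, rank 1 on cores 4 to 7, and rank 7 on cores 28 to 31.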

compact — assigns all MPI ranks to physical cores first, and then starts assigning logical cores or hardware threads to each rank’s threads in linear fashion. This policy should only be used when the hyper-/hardware-multithreading is enabled and the user intends to map application threads to hardware threads.

Example with 8 MPI processes and 4 OpenMP threads per process on a 68-core KNL processor with “compact” binding policy for threads is shown below:

$ mpirun_rsh -hostfile hostfile -np 8 OMP_NUM_THREADS=4 MV2_CPU_BINDING_POLICY=hybrid MV2_THREADS_PER_PROCESS=4 MV2_HYBRID_BINDING_POLICY=compact ./a.out

This will assign one physical core per MPI rank. Since there are four hardware-threads per physical core in KNL, the four OpenMP threads of each rank will be mapped to the hardware threads of the corresponding core. The CPU binding will be set as shown in Figure 9.

Note — The correct use of this environment variable mandates that MV2_CPU_BINDING_POLICY should be set to “hybrid” and MV2_THREADS_PER_PROCESS must be a positive integer.
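To make the "compact" placement concrete, the following C sketch models which logical CPU each thread would land on. It is a simplified illustration rather than MVAPICH2's actual code. The function name compact_logical_cpu is hypothetical, and the logical CPU numbering scheme is an assumption that may not match a given system.

```c
#include <assert.h>

/* Hardware threads per physical core on KNL, as stated in the text. */
#define KNL_HW_THREADS_PER_CORE 4

/* Illustrative model only (not MVAPICH2 code): rank r is placed on
 * physical core r, and its thread t uses hardware thread t of that core.
 * ASSUMPTION: the OS numbers logical CPUs so that hardware thread h of
 * physical core c has id c + h * num_cores. Check the numbering on your
 * system (e.g. with lscpu) before relying on this. */
int compact_logical_cpu(int rank, int thread, int num_cores)
{
    assert(num_cores > 0);
    assert(rank >= 0 && rank < num_cores);
    assert(thread >= 0 && thread < KNL_HW_THREADS_PER_CORE);
    return rank + thread * num_cores;
}
```

Under this assumed numbering on a 68-core processor, the four threads of rank 0 would map to logical CPUs 0, 68, 136 and 204, all of which belong to physical core 0.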

spread — This policy ensures that no two MPI ranks get bound to the same physical core. Moreover, this policy distributes the resources (physical cores and hardware threads) equally among the MPI processes. It also ensures correct bindings on some clusters that have non-trivial vendor-specific mappings.

Example with 8 MPI processes and 4 OpenMP threads per process on a 68-core KNL processor with “spread” binding policy for threads is shown below:

$ mpirun_rsh -hostfile hostfile -np 8 OMP_NUM_THREADS=4 MV2_CPU_BINDING_POLICY=hybrid MV2_THREADS_PER_PROCESS=4 MV2_HYBRID_BINDING_POLICY=spread ./a.out

This will assign one MPI rank per physical core avoiding resource contention caused by multiple MPI ranks getting bound to logical cores.

Note — The correct use of this environment variable mandates that MV2_CPU_BINDING_POLICY should be set to “hybrid” and MV2_THREADS_PER_PROCESS must be a positive integer.

numa — This policy allows for MPI ranks to get bound to different numa domains in a round-robin manner. For example, the first process will be bound to the first physical core of the first numa domain, the second process on to the first physical core of the second numa domain, and so on. It also ensures correct bindings on some clusters that have non-trivial vendor specific mappings.

Example with 16 MPI processes on an AMD EPYC 7551 processor with 8 NUMA domains is shown below:

$ mpirun_rsh -hostfile hosts -np 16 MV2_CPU_BINDING_POLICY=hybrid MV2_HYBRID_BINDING_POLICY=numa ./a.out

Each MPI rank is assigned to a different NUMA domain in a round-robin manner. The CPU binding will be set as shown in Figure 10.

Note — The correct use of this environment variable mandates that MV2_CPU_BINDING_POLICY should be set to “hybrid” and MV2_THREADS_PER_PROCESS must be a positive integer (already set by default). Furthermore, this policy is the default on AMD EPYC systems when more than two processes are used.
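The round-robin placement described for the "numa" policy can be sketched in C as follows. This is an illustrative model only, not MVAPICH2's implementation. The type numa_slot and the function numa_round_robin are hypothetical names, and the sketch deliberately reports only a domain index and a core position within that domain, since the mapping to OS CPU ids is system-specific.

```c
#include <assert.h>

/* Where a rank lands under the modeled round-robin placement. */
typedef struct {
    int domain;      /* NUMA domain index */
    int local_core;  /* position of the physical core within that domain */
} numa_slot;

/* Illustrative model only (not MVAPICH2 code): ranks are dealt out to
 * NUMA domains in turn, so rank 0 takes the first core of domain 0,
 * rank 1 the first core of domain 1, and once every domain has one rank
 * the next rank takes the second core of domain 0. */
numa_slot numa_round_robin(int rank, int num_domains)
{
    numa_slot slot;
    assert(rank >= 0);
    assert(num_domains > 0);
    slot.domain = rank % num_domains;
    slot.local_core = rank / num_domains;
    return slot;
}
```

For 16 ranks over 8 domains, this model gives each domain two ranks: ranks 0 and 8 share domain 0, ranks 1 and 9 share domain 1, and so on.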

bunch — assigns cores to MPI ranks in the same way as the “bunch” option described earlier. It additionally takes care of vendor-specific non-trivial core mappings. Further, it ensures that the MPI ranks are bound to the physical cores only.

scatter — similar to “bunch”, this assigns cores to MPI ranks in the same way as the “scatter” option given to MV2_CPU_BINDING_POLICY. It additionally takes care of vendor-specific non-trivial core mappings. It also ensures that the MPI ranks are bound to the physical cores only.

Note — The “bunch” and “scatter” options for MV2_HYBRID_BINDING_POLICY are recommended as a solution to vendor-specific non-trivial mapping issues. These should be considered an alternative to “bunch” or “scatter” given to MV2_CPU_BINDING_POLICY.

MVAPICH2 supports hot-spot and congestion avoidance using the InfiniBand multi-pathing mechanism. This support is available for MPI applications using the OFA-IB-CH3 interface.

To enable this functionality, set the run-time variable MV2_USE_HSAM (Section 11.78), as shown in the following example:

$ mpirun_rsh -np 2 n0 n1 MV2_USE_HSAM=1 ./cpi

or

$ mpiexec -n 2 -hosts n0,n1 -env MV2_USE_HSAM 1 ./cpi

This functionality automatically defines the number of paths for hot-spot avoidance. Alternatively, the maximum number of paths to be used between a pair of processes can be defined by using a run-time variable MV2_NUM_QP_PER_PORT (Section 11.37).

We expect this functionality to show benefits in the presence of at least partially non-overlapping paths in the network. OpenSM, the subnet manager distributed with OpenFabrics, supports the LMC mechanism, which can be used to create multiple paths:

$ opensm -l4

will start the subnet manager with the LMC value set to four, creating sixteen paths between every pair of nodes.
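The relationship between the LMC value and the path count is a power of two: an LMC of n gives each port 2^n LIDs, which is why an LMC of four yields sixteen paths. A minimal C sketch of this arithmetic follows; the function name lmc_path_count is hypothetical and is not part of OpenSM or MVAPICH2.

```c
#include <assert.h>

/* Number of LIDs (and hence addressable paths) per port for a given
 * LMC value: 2^LMC. The InfiniBand LMC field is 3 bits wide, so the
 * valid range is 0..7. */
unsigned int lmc_path_count(unsigned int lmc)
{
    assert(lmc <= 7);
    return 1u << lmc;
}
```

For instance, lmc_path_count(4) evaluates to 16, matching the opensm -l4 example above, while an LMC of 0 leaves a single path.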

The MVAPICH2 CH3-based interface supports MPI communication using NVIDIA GPU device memory with CUDA versions 4.0 or later. This feature removes the need for the application developer to explicitly move the data from device memory to host memory before using MPI for communication. The new support allows direct MPI communication from device memory to device memory, device memory to host memory and host memory to device memory. It also supports point-to-point and collective communication using contiguous and non-contiguous MPI datatypes. It takes advantage of CUDA IPC for intra-node GPU-GPU communication (with CUDA 4.1).

For example, without CUDA support in the MPI library, a typical user might use the following sequence of calls to move data from one device memory to another device memory.

…

cudaMemcpy(host_buf, device_buf, size, cudaMemcpyDeviceToHost);

MPI_Isend(host_buf, size, MPI_CHAR, 1, 100, MPI_COMM_WORLD, req);

…

With the support provided in MVAPICH2 and CUDA 4.0 (and later), the user can achieve the same data movement operation by issuing MPI calls directly on device memory.

…

MPI_Isend(device_buf, size, MPI_CHAR, 1, 100, MPI_COMM_WORLD, req);

…

This support can be enabled by configuring MVAPICH2 with --enable-cuda and setting the environment variable MV2_USE_CUDA (Section 11.121) to 1 at run time.